Faster Than Light Neutrinos

Date: Fri, 21 Oct 2011 01:06:21 -0700

Subject: neutrinos faster than speed of light?

From: bmcelligott4789@gmail.com

To: omniphysics@cosmosdawn.net

Hello! i've been reading on your website for a couple years now off and on, i really enjoy what you've done, the truth that you have found and helped so many others find for themselves. you are healing our concept of self and that is a commendable task.

i am writing now because i was wondering what you had to say about the neutrinos breaking lightspeed barrier, i can't remember if you have posted about it or not, did a quick search and it didn't find anything....anyways, would love to hear your take on things.

Have a wonderful day!

Brendan

Faster than light particles found, claim scientists

Particle physicists detect neutrinos travelling faster than light, a feat forbidden by Einstein's theory of special relativity

Ian Sample, science correspondent

guardian.co.uk,

Thursday 22 September 2011 23.32 BST

Neutrinos, like the ones above, have been detected travelling faster than light, say particle physicists.

Photograph: Dan Mccoy /Corbis

It is a concept that forms a cornerstone of our understanding of the universe and the concept of time – nothing can travel faster than the speed of light.

But now it seems that researchers working in one of the world's largest physics laboratories, under a mountain in central Italy, have recorded particles travelling at a speed that is supposedly forbidden by Einstein's theory of special relativity.

Scientists at the Gran Sasso facility will unveil evidence on Friday that raises the troubling possibility of a way to send information back in time, blurring the line between past and present and wreaking havoc with the fundamental principle of cause and effect.

They will announce the result at a special seminar at Cern – the European particle physics laboratory – timed to coincide with the publication of a research paper (pdf) describing the experiment.

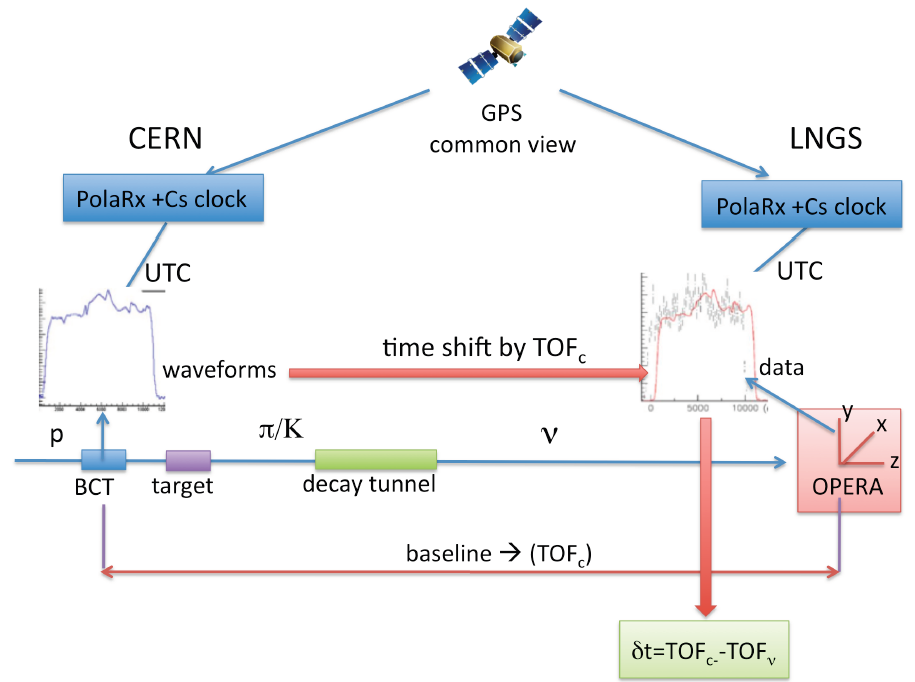

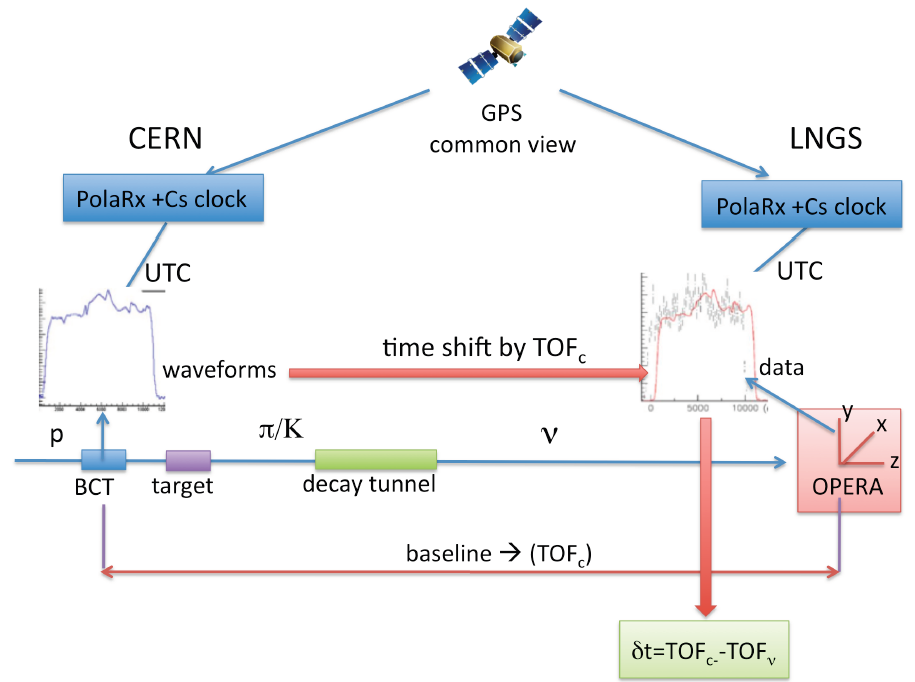

Researchers on the Opera (Oscillation Project with Emulsion-tRacking Apparatus) experiment recorded the arrival times of ghostly subatomic particles called neutrinos sent from Cern on a 730km journey through the Earth to the Gran Sasso lab.

The trip would take a beam of light 2.4 milliseconds to complete, but after running the experiment for three years and timing the arrival of 15,000 neutrinos, the scientists discovered that the particles arrived at Gran Sasso sixty billionths of a second earlier, with an error margin of plus or minus 10 billionths of a second.

The measurement amounts to the neutrinos travelling faster than the speed of light by a fraction of 20 parts per million. Since the speed of light is 299,792,458 metres per second, the neutrinos were evidently travelling at 299,798,454 metres per second.

The result is so unlikely that even the research team is being cautious with its interpretation. Physicists said they would be sceptical of the finding until other laboratories confirmed the result.

Antonio Ereditato, coordinator of the Opera collaboration, told the Guardian: "We are very much astonished by this result, but a result is never a discovery until other people confirm it.

"When you get such a result you want to make sure you made no mistakes, that there are no nasty things going on you didn't think of. We spent months and months doing checks and we have not been able to find any errors.

"If there is a problem, it must be a tough, nasty effect, because trivial things we are clever enough to rule out."

The Opera group said it hoped the physics community would scrutinise the result and help uncover any flaws in the measurement, or verify it with their own experiments.

Subir Sarkar, head of particle theory at Oxford University, said: "If this is proved to be true it would be a massive, massive event. It is something nobody was expecting.

"The constancy of the speed of light essentially underpins our understanding of space and time and causality, which is the fact that cause comes before effect."

The key point underlying causality is that the laws of physics as we know them dictate that information cannot be communicated faster than the speed of light in a vacuum, added Sarkar.

"Cause cannot come after effect and that is absolutely fundamental to our construction of the physical universe. If we do not have causality, we are buggered."

The Opera experiment detects neutrinos as they strike 150,000 "bricks" of photographic emulsion films interleaved with lead plates. The detector weighs a total of 1300 tonnes.

Despite the marginal increase on the speed of light observed by Ereditato's team, the result is intriguing because its statistical significance, the measure by which particle physics discoveries stand and fall, is so strong.

Physicists can claim a discovery if the chances of their result being a fluke of statistics are greater than five standard deviations, or less than one in a few million. The Gran Sasso team's result is six standard deviations.

Ereditato said the team would not claim a discovery because the result was so radical. "Whenever you touch something so fundamental, you have to be much more prudent," he said.

Alan Kostelecky, an expert in the possibility of faster-than-light processes at Indiana University, said that while physicists would await confirmation of the result, it was none the less exciting.

"It's such a dramatic result it would be difficult to accept without others replicating it, but there will be enormous interest in this," he told the Guardian.

One theory Kostelecky and his colleagues put forward in 1985 predicted that neutrinos could travel faster than the speed of light by interacting with an unknown field that lurks in the vacuum.

"With this kind of background, it is not necessarily the case that the limiting speed in nature is the speed of light," he said. "It might actually be the speed of neutrinos and light goes more slowly."

Neutrinos are mysterious particles. They have a minuscule mass, no electric charge, and pass through almost any material as though it was not there.

Kostelecky said that if the result was verified – a big if – it might pave the way to a grand theory that marries gravity with quantum mechanics, a puzzle that has defied physicists for nearly a century.

"If this is confirmed, this is the first evidence for a crack in the structure of physics as we know it that could provide a clue to constructing such a unified theory," Kostelecky said.

Heinrich Paes, a physicist at Dortmund University, has developed another theory that could explain the result. The neutrinos may be taking a shortcut through space-time, by travelling from Cern to Gran Sasso through extra dimensions. "That can make it look like a particle has gone faster than the speed of light when it hasn't," he said.

But Susan Cartwright, senior lecturer in particle astrophysics at Sheffield University, said: "Neutrino experimental results are not historically all that reliable, so the words 'don't hold your breath' do spring to mind when you hear very counter-intuitive results like this."

Teams at two experiments known as T2K in Japan and MINOS near Chicago in the US will now attempt to replicate the finding. The MINOS experiment saw hints of neutrinos moving at faster than the speed of light in 2007 but has yet to confirm them.

• This article was amended on 23 September 2011 to clarify the relevance of the speed of light to causality.

Dear Brendan!

The measured violation of the speed of light cosmic acceleration limit by 1 part in 50,000, if confirmed, simply illustrates the nature of the (anti)neutrinos in their role within the standard model of particle physics.

As you can discern in the accompanying descriptions, reproduced at:

http://www.thuban.spruz.com/forums/?page=post&fid=&lastp=1&id=E4C02335-4EC2-4BEC-8532-7D7CFFFDF4EC

the neutrinos are massless in their basic electron and muon flavours, but experience a Higgsian Restmass Induction at the 0.052 electronvolt energy level and in the form of a 'Sterile Higgs Neutrino'. This constituted a verified experimental result at the Super Kamiokande neutrino detector beneath the Japanese Alps and was published worldwide on June 4th, 1998.

The (provisionally) measured increase in 'c' as the lightspeed invariant was the ratio 299,792,458/299,798,454=0.99998...

This can be translated to a decrease in the mass of the electron by this Higgsian neutrino mass induction in the range from: 2.969 - 3.021 eV, centred on 2.995 eV and differing by 0.052106 eV as the mass differential measured by the Kamiokande detector.

Using a relativistic electronmass at 0.18077c at the 8.5748 keV level of electric potential (as in the derivation of the Higgs neutrinomass below and using cosmic calibrated units *) then decreases the relativistic electronmass from 9.290527155x10⁻³¹ kg* or 520,491.856 eV* by 2.995 eV* (or so 5.4x10⁻³⁶ kg*) as 520,491.856 eV* - 2.995 eV* = 520,488.861 eV*.

This compares as a trisected kernel (see the fractional electron charge distribution as the Leptonic Outer Ring) within the error margins to the variation in 'c' ratio as per the article:

520,488.861/520,491.856=0.999994=1/1.000006 as 3 parts in 521,000 or about 1 part in 174,000 and so about 30% of the lightspeed variance as the ratio 299,792,458/299,798,454=0.99998...and as 1 part in 50,000.
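As a cross-check of the arithmetic above, the two ratios can be recomputed directly. This is only a minimal Python sketch using the figures quoted in this post; the variable names are illustrative and the starred eV* values are taken at face value.

```python
# Speeds quoted in the Guardian article.
C_LIGHT = 299_792_458.0      # m/s
V_NEUTRINO = 299_798_454.0   # m/s

# Fractional excess of the quoted neutrino speed over c.
excess = (V_NEUTRINO - C_LIGHT) / C_LIGHT
one_part_in_c = 1.0 / excess

# Electron energy figures from the reply (starred units, taken as given).
E_ELECTRON_EV = 520_491.856
E_SHIFT_EV = 2.995

# Fractional decrease of the electron energy.
mass_fraction = E_SHIFT_EV / E_ELECTRON_EV
one_part_in_mass = 1.0 / mass_fraction

# Share of the lightspeed variance covered by the mass decrease.
share = mass_fraction / excess

print(f"speed excess: 1 part in {one_part_in_c:,.0f}")
print(f"mass decrease: 1 part in {one_part_in_mass:,.0f}")
print(f"share of the variance: {share:.1%}")
```

The output should land near 1 part in 50,000, 1 part in 174,000 and a share of roughly 30%, matching the rounded figures in the text.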

A detailed discussion of the subatomic quark-lepton charge distribution is found here:

The Charge Distribution of the Wavequarkian Standard Model - "Large proton Halo sparks devilish row"

http://www.thuban.spruz.com/forums/?page=post&id=8C67A51B-F47A-4646-A053-ADAD87677F63&fid=019788EF-0F1C-4E3D-B875-AB41438E63AD

The cosmic acceleration limit so is not violated by measuring electron-neutrino interactions, but the Higgs Bosonic Restmass Induction manifests in a subatomic internal charge redistribution, which reduces the energy of the interacting electron (or muon or tauon) interactions, which are always associated with their leptonic parental counterparts.

It so is the massless eigenstate of the 'Precursor Higgs Boson' as a 'Scalar RestmassPhoton' coupled to a colourcharged gauge photon of 'sourcesink antiradiation' as a Gauge Goldstone Boson (string or brane), which partitions this primordial selfstate into a trisected 'Scalar Higgs Neutrino Kernel' encompassed by an 'Inner Mesonic Ring' and an 'Outer Leptonic Ring', then defining a post Big Bang reconfiguration of the unified gauged particle states, commonly known as the Standard Model of Particle Physics.

Measuring (anti)neutrinos as travelling 'faster than the speed of light', so can be interpreted as a superbrane interaction in the multidimensional selfstate preceding the so called Big Bang, which was in fact preceded by an inflationary de Broglie wavematter phase epoch, where the 'speed of light' is by nature tachyonic as a wavematter speed VdB.

Data:

Schwarzschild-HubbleRadius:

RH=2GoMH/c²

Gravitational String-Oscillator(ZPE)Harmonic-Potential-Energy:

EPo=½hfo=hc/4πlP=½lPc²/Go=½mPc²=GomPMH/RH

for string-duality coupling between wormhole mass mP and closure Black Hole metric

MH=ρcriticalVSeed

Gravitational Acceleration per unit-stringmass:

ag=GoMH/RH²=GoMHc⁴/4Go²MH²=c⁴/4GoMH=c²/2RH=½Hoc

for a Nodal Hubble-Constant:

Ho=c/RH

Inflaton parameters:

De Broglie Phase-Velocity (VdB) and de Broglie Phase-Acceleration (AdB):

VdB=RHfo ~ 4.793x10⁵⁶ (m/s)* ~ 1.598x10⁴⁸ c = 10²²RH

AdB=RHfo² ~ 1.438x10⁸⁷ (m/s²)*

De Broglie Phase Speed: VdB=wavelength x frequency=(h/mVGroup)x(mc²/h)=c²/VGroup > c for all VGroup < c
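The de Broglie relation in the last line can be illustrated numerically. This is a minimal Python sketch, assuming the standard SI value of c; the function name `phase_velocity` is hypothetical and introduced only for this example.

```python
C = 299_792_458.0  # speed of light in m/s (standard SI value)


def phase_velocity(v_group: float) -> float:
    """Return the de Broglie phase speed c^2 / v_group for 0 < v_group < c."""
    if not 0.0 < v_group < C:
        raise ValueError("group speed must lie strictly between 0 and c")
    return C ** 2 / v_group


# The slower the group speed, the larger the phase speed.
for fraction in (0.1, 0.5, 0.9, 0.999999):
    v_phase = phase_velocity(fraction * C)
    print(f"v_group = {fraction} c  ->  v_phase = {v_phase / C:.6f} c")
```

Every value printed exceeds c, and the product of group and phase speed stays at c², which is all the relation states.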

This then represents 'my take' on the recent 'faster than light' measurements at Gran Sasso and CERN according to Quantum Relativity.

Tonyblue

Hypersphere volumes and the mass of the Tau-neutrino

Consider the universe's thermodynamic expansion to proceed at an initializing time (and practically at lightspeed for the lightpath x=ct describing the hypersphere radii) from a single spacetime quantum with a quantized toroidal volume 2π²rw³, where rw is the characteristic wormhole radius for this basic building unit for a quantized universe (say in string parameters given in the Planck scale and its transformations).

At a time tG, say so 18.85 minutes later, the count of space time quanta can be said to be 9.677x10¹⁰² for a universal 'total hypersphere radius' of about rG=3.39x10¹¹ meters and for a G-Hypersphere volume of so 7.69x10³⁵ cubic meters.

{This radius is about 2.3 Astronomical Units (AUs) and about the distance of the Asteroid Belt from the star Sol in a typical (our) solar system.}
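The G-Hypersphere figures can be reproduced from the volume formula 2π²r³. This is a minimal Python sketch, assuming the wormhole radius rw=10⁻²²/2π meters defined further below, the standard SI value of c and the IAU value of the astronomical unit; the names are illustrative.

```python
import math

C = 299_792_458.0            # m/s, standard SI value
AU = 1.495978707e11          # meters per astronomical unit
LAMBDA_W = 1e-22             # wormhole perimeter in meters (from the text)
R_W = LAMBDA_W / (2 * math.pi)  # wormhole radius rw


def hypersphere_volume(radius: float) -> float:
    """Toroidal (3-sphere surface) volume 2*pi^2*r^3."""
    return 2 * math.pi ** 2 * radius ** 3


r_G = 3.39e11                                  # meters, from the text
t_G_minutes = r_G / C / 60                     # light travel time x = ct
volume_G = hypersphere_volume(r_G)
count_G = volume_G / hypersphere_volume(R_W)   # number of spacetime quanta
r_G_au = r_G / AU

print(f"t_G      = {t_G_minutes:.2f} minutes")
print(f"volume_G = {volume_G:.3e} cubic meters")
print(f"count_G  = {count_G:.3e} quanta")
print(f"r_G      = {r_G_au:.2f} AU")
```

With the rounded radius the count comes out within a fraction of a percent of the 9.677x10¹⁰² quoted above; the small difference is a rounding effect of using rG to three figures.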

This modelling of a mapping of the quantum-microscale onto the cosmological macroscale should now indicate the mapping of the wormhole scale onto the scale of the sun itself.

rw/RSun(i)=Re/rE for RSun(i)=rwrE/Re=1,971,030 meters. This gives an 'inner' solar core of diameter about 3.94x10⁶ meters.

As the classical electron radius is quantized in the wormhole radius in the formulation Re=10¹⁰rw/360, rendering a finestructure for Planck's Constant as a 'superstring-parametric': h=rw/2Rec³; the 'outer' solar scale becomes RSun(o)=360RSun(i)=7.092x10⁸ meters as the observed radius for the solar disk.
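The solar scale mapping can be checked with a few lines of Python. This is a sketch under stated assumptions: the quoted numbers are only reproduced if the classical electron radius is taken as 10¹⁰ times the wormhole perimeter 10⁻²² meters divided by 360 (about 2.78x10⁻¹⁵ meters), while rw itself is 10⁻²²/2π meters; that reading is my assumption, not something stated explicitly above.

```python
import math

LAMBDA_W = 1e-22                         # wormhole perimeter in meters
R_W = LAMBDA_W / (2 * math.pi)           # wormhole radius rw
R_E_CLASSICAL = 1e10 * LAMBDA_W / 360    # assumed reading of Re, ~2.78e-15 m
r_E = 3.44e14                            # E-Hypersphere radius in meters

# Proportion rw / RSun(i) = Re / rE solved for the inner solar radius.
r_sun_inner = R_W * r_E / R_E_CLASSICAL
r_sun_outer = 360 * r_sun_inner

print(f"RSun(i) = {r_sun_inner:,.0f} meters")
print(f"RSun(o) = {r_sun_outer:.4e} meters")
```

Both results agree with the quoted 1,971,030 meters and 7.092x10⁸ meters to better than a tenth of a percent, the remainder being rounding in rE.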

19 seconds later; a F-Hypersphere radius is about rF=3.45x10¹¹ meters for a F-count of so 1.02x10¹⁰³ spacetime quanta.

We also define an E-Hypersphere radius at rE=3.44x10¹⁴ meters and an E-count of so 10¹¹² to circumscribe this 'solar system' in so 230 AU.

We so have 4 hypersphere volumes, based on the singularity-unit and magnified via spacetime quantization in the hyperspheres defined in counters G, F and E. We consider these counters as somehow fundamental to the universe's expansion, serving as boundary conditions in some manner. As counters, those googol-numbers can be said to be defined algorithmically and independent of mensuration physics of any kind.

The mapping of the atomic nucleus onto the thermodynamic universe of the hyperspheres

Should we consider the universe to follow some kind of architectural blueprint; then we might attempt to use our counters to be isomorphic (same form or shape) in a one-to-one mapping between the macrocosmos and the microcosmos. So we define a quantum geometry for the nucleus in the simplest atom, say Hydrogen. The hydrogenic nucleus is a single proton of quark-structure udu and which we assign a quantum geometric template of Kernel-InnerRing-OuterRing (K-IR-OR), say in a simple model of concentricity.

We set the up-quarks (u) to become the 'smeared out core' in say a tripartition uuu so allowing a substructure for the down-quark (d) to be u+InnerRing. A down-quark so is a unitary ring coupled to a kernel-quark. The proton's quark-content so can be rewritten and without loss of any of the properties associated with the quantum conservation laws; as proton-> udu->uuu+IR=KKK+IR. We may now label the InnerRing as Mesonic and the OuterRing as Leptonic.

The OuterRing is so definitive for the strange quark in quantum geometric terms: s=u+OR.

A neutron's quark content so becomes neutron=dud=KIR.K.KIR with a 'hyperon resonance' in the lambda=sud=KOR.K.KIR and so allowing the neutron's beta decay to proceed in disassociation from a nucleus (where protons and neutrons bind in meson exchange); i.e. in the form of 'free neutrons'.

The neutron decays in the oscillation potential between the mesonic inner ring and the leptonic outer ring as the 'ground-energy' eigenstate.

There actually exist three uds-quark states which decay differently via strong, electromagnetic and weak decay rates in the uds (Sigma⁰ Resonance); usd (Sigma⁰) and the sud (Lambda⁰) in increasing stability.

This quantum geometry then indicates the behaviour of the triple-uds decay from first principles, whereas the contemporary standard model does not, considering the u-d-s quark eigenstates to be quantum geometrically undifferentiated.

The nuclear interactions, both strong and weak, are confined in a 'Magnetic Asymptotic Confinement Limit' coinciding with the Classical Electron radius Re=ke²/mec² and in a scale of so 3 Fermi or 2.8x10⁻¹⁵ meters. At a distance further away from this scale, the nuclear interaction strength vanishes rapidly.

The wavenature of the nucleus is given in the Compton-Radius Rc=h/2πmc with m the mass of the nucleus, say a proton; the latter so having Rc=2x10⁻¹⁶ meters or so 0.2 fermi.

The wave-matter (after de Broglie generalising wavespeed vdB from c in Rcc) then relates the classical electron radius as the 'confinement limit' to the Compton scale in the electromagnetic finestructure constant in Re=Alpha.Rc.

The extension to the Hydrogen-Atom is obtained in the expression Re=Alpha².RBohr1 for the first Bohr-Radius as the 'ground-energy' of so 13.6 eV at a scale of so 10⁻¹¹ to 10⁻¹⁰ meters (Angstroems).
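The scale relations of the last few paragraphs can be verified against standard constants. This is a minimal Python sketch, assuming CODATA 2018 values; note that the relation Re=Alpha.Rc holds numerically when Rc is the Compton radius of the electron, while the proton gives the 0.2 fermi figure.

```python
# CODATA 2018 values (SI units), assumed here for the check.
K_E = 8.9875517923e9          # Coulomb constant
E_CHARGE = 1.602176634e-19    # elementary charge in coulombs
M_E = 9.1093837015e-31        # electron mass in kg
M_P = 1.67262192369e-27       # proton mass in kg
C = 299_792_458.0             # speed of light in m/s
HBAR = 1.054571817e-34        # reduced Planck constant h/2pi
ALPHA = 7.2973525693e-3       # fine structure constant
A_BOHR = 5.29177210903e-11    # first Bohr radius in meters

# Classical electron radius Re = k e^2 / (me c^2).
r_classical = K_E * E_CHARGE ** 2 / (M_E * C ** 2)

# Compton radii Rc = h / (2 pi m c) for the electron and the proton.
r_compton_electron = HBAR / (M_E * C)
r_compton_proton = HBAR / (M_P * C)

print(f"Re                = {r_classical:.4e} m")
print(f"Alpha   * Rc(e)   = {ALPHA * r_compton_electron:.4e} m")
print(f"Alpha^2 * RBohr1  = {ALPHA ** 2 * A_BOHR:.4e} m")
print(f"Rc(proton)        = {r_compton_proton:.4e} m")
```

The first three lines agree to the precision of the constants, which is the content of Re=Alpha.Rc=Alpha².RBohr1.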

These 'facts of measurements' of the standard models now allow our quantum geometric correspondences to assume cosmological significance in their isomorphic mapping. We denote the OuterRing as the classical electron radius and introduce the InnerRing as a mesonic scale contained within the geometry of the proton and all other elementary baryonic- and hadronic particles.

Firstly, we define a mean macro-mesonic radius as: rM=½(rF+rG) ~ 3.42x10¹¹ meters and set the macro-leptonic radius to rE=3.44x10¹⁴ meters.

Secondly, we map the macroscale onto the microscale, say in the simple proportionality relation, using

(de)capitalised symbols: Re/Rm=rE/rM.

We can so solve for the micro-mesonic scale Rm=Re.rM/rE ~ 2.76x10⁻¹⁸ meters.

So reducing the apparent measured 'size' of a proton by a factor of about 1000 gives the scale of the subnuclear mesonic interaction, say the strong interaction coupling by pions.
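The proportionality Re/Rm=rE/rM can be evaluated in a short Python sketch. The one assumption, labeled in the code, is the value used for Re: the quoted 2.76x10⁻¹⁸ meters is reproduced with Re taken as 10¹⁰x10⁻²²/360 meters (about 2.78x10⁻¹⁵ meters) rather than with the CODATA value.

```python
r_G = 3.39e11    # G-Hypersphere radius in meters (from the text)
r_F = 3.45e11    # F-Hypersphere radius in meters (from the text)
r_E = 3.44e14    # E-Hypersphere radius in meters (from the text)

# Assumption: classical electron radius as 1e10 * 1e-22 / 360 meters.
R_E_CLASSICAL = 1e10 * 1e-22 / 360

# Mean macro-mesonic radius and the mapped micro-mesonic scale.
r_M = 0.5 * (r_F + r_G)
R_m = R_E_CLASSICAL * r_M / r_E

print(f"rM = {r_M:.3e} meters")
print(f"Rm = {R_m:.3e} meters")
print(f"Re / Rm = {R_E_CLASSICAL / R_m:.1f}")
```

The last ratio is the factor of about 1000 mentioned above.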

The Higgsian Scalar-Neutrino

The (anti)neutrinos are part of the electron mass in a decoupling process between the kernel and the rings. Neutrino mass is so not cosmologically significant and cannot be utilized in 'missing mass' models.

We may define the kernel-scale as that of the singular spacetime-quantum unit itself, namely as the wormhole radius rw=10⁻²²/2π meters.

Before the decoupling between kernel and rings, the kernel-energy can be said to be strong-weakly coupled or unified to encompass the gauge-gluon of the strong interaction and the gauge-weakon of the weak interaction defined in a coupling between the OuterRing and the Kernel and bypassing the mesonic InnerRing.

So for matter, a W-Minus (weakon) must consist of a coupled lepton part, yet linking to the strong interaction via the kernel part. If now the colour-charge of the gluon transmutates into a 'neutrino-colour-charge'; then this decoupling will not only define the mechanics for the strong-weak nuclear unification coupling; but also the energy transformation of the gauge-colour charge into the gauge-lepton charge.

There are precisely 8 gluonic transitive energy permutation eigenstates between a 'radiative-additive' Planck energy in W(hite)=E=hf and an 'inertial-subtractive' Einstein energy in B(lack)=E=mc², which describe the baryonic- and hyperonic 'quark-sectors' in: mc²=BBB, BBW, WBB, BWB, WBW, BWW, WWB and WWW=hf.

The permutations are cyclic and not linearly commutative. For mesons (quark-antiquark eigenstates), the permutations are BB, BW, WB and WW in the SU(2) and SU(3) Unitary Symmetries.

So generally, we may state that the gluon is unified with a weakon before decoupling; this decoupling 'materialising' energy in the form of mass, namely the mass of the measured 'weak-interaction-bosons' of the standard model (W- for charged matter; W+ for charged antimatter and Z⁰ for neutral mass-currents say).

Experiment shows, that a W- decays into spin-aligned electron-antineutrino or muon-antineutrino or tauon-antineutrino pairings under the conservation laws for momentum and energy.

So, using our quantum geometry, we realise, that the weakly decoupled electron must represent the OuterRing, and just as shown in the analysis of QED (Quantum-Electro-Dynamics). Then it can be inferred, that the Electron's Antineutrino represents a transformed and materialised gluon via its colourcharge, now decoupled from the kernel.

Then the OuterRing contracts (say along its magnetoaxis defining its asymptotic confinement); in effect 'shrinking the electron' in its inertial and charge- properties to its experimentally measured 'point-particle-size'. Here we define this process as a mapping between the Electronic wavelength 2πRe and the wormhole perimeter λw=2πrw.

But in this process of the 'shrinking' classical electron radius towards the gluonic kernel (say); the mesonic ring will be encountered and it is there, that any mass-inductions should occur to differentiate a massless lepton gauge-eigenstate from that manifested by the weakon precursors.

{Note: Here the W- inducing a lefthanded neutron to decay weakly into a lefthanded proton, a lefthanded electron and a righthanded antineutrino. Only lefthanded particles decay weakly in CP-parity-symmetry violation, effected by neutrino-gauge definitions from first principles}.

This so defines a neutrino-oscillation potential at the InnerRing-Boundary. Using our proportions and assigning any neutrino-masses mυ as part of the electronmass me, gives the following proportionality as the mass eigenvalue of the Tau-neutrino:

mυ=meλw.rE/(2πrMRe) ~ 5.4x10⁻³⁶ kg or 3.0 eV.

So we have derived, from first principles, a (anti)neutrinomass eigenstate of 3 eV.
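The mass formula can be evaluated numerically as a sanity check of the quoted figures. This is a minimal Python sketch under assumptions that are mine and labeled in the code: me is taken as the relativistic electronmass 9.290527155x10⁻³¹ kg* quoted earlier in this post, Re as 10¹⁰λw/360, and the conversion to eV uses standard SI values of c and e rather than the starred units.

```python
import math

C = 299_792_458.0             # m/s, standard SI value (not starred units)
EV_IN_JOULE = 1.602176634e-19 # joules per electronvolt, standard SI value

M_E_REL = 9.290527155e-31     # kg*, relativistic electronmass from the post
LAMBDA_W = 1e-22              # wormhole perimeter in meters
R_E_CLASSICAL = 1e10 * LAMBDA_W / 360  # assumed reading of Re, ~2.78e-15 m
r_E = 3.44e14                 # macro-leptonic radius in meters
r_M = 3.42e11                 # macro-mesonic radius in meters

# m_nu = me * lambda_w * rE / (2 pi rM Re)
m_nu_kg = M_E_REL * LAMBDA_W * r_E / (2 * math.pi * r_M * R_E_CLASSICAL)
m_nu_ev = m_nu_kg * C ** 2 / EV_IN_JOULE

print(f"m_nu = {m_nu_kg:.2e} kg")
print(f"m_nu = {m_nu_ev:.2f} eV")
```

With these inputs the result rounds to the 5.4x10⁻³⁶ kg and 3.0 eV stated above; using the electron rest mass instead lowers it by about 2%.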

This confirms the Mainz, Germany Result as the upper limit for neutrino masses resulting from ordinary Beta-Decay and indicates the importance of the primordial beta-decay for the cosmogenesis and the isomorphic scale mappings stated above.

The hypersphere intersection of the G- and F-count of the thermodynamic expansion of the mass-parametric universe so induces a neutrino-mass of 3 eV at the 2.76x10⁻¹⁸ meter marker.

The more precise G-F differential in terms of eigenenergy is 0.052 eV as the mass-eigenvalue for the Higgs-(Anti)neutrino (which is scalar of 0-spin and constituent of the so called Higgs Boson as the kernel-Eigenstate). This has been experimentally verified in the Super-Kamiokande (Japan) neutrino experiments published in 1998 and in subsequent neutrino experiments around the globe, say Sudbury, KamLAND, Dubna, MiniBooNE and MINOS.

This Higgs-Neutrino-Induction is 'twinned', meaning that this energy can be related to the energy of so termed 'slow- or thermal neutrons' in a coupled energy of so twice 0.0253 eV for a thermal equilibrium at so 20° Celsius and a standard (most probable) speed of so 2200 m/s from the Maxwell statistical distributions for the kinematics.
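The thermal neutron figures follow from the Maxwell distribution and can be recomputed. This is a minimal Python sketch, assuming CODATA 2018 constants; it shows that 2200 m/s corresponds to the most probable speed sqrt(2kT/m) at 20° Celsius, with the root-mean-square speed sqrt(3kT/m) being somewhat higher.

```python
import math

K_B_EV = 8.617333262e-5       # Boltzmann constant in eV per kelvin
EV_IN_JOULE = 1.602176634e-19 # joules per electronvolt
M_NEUTRON = 1.67492749804e-27 # neutron mass in kg

temperature = 293.15          # 20 degrees Celsius in kelvin
kT_ev = K_B_EV * temperature  # thermal energy in eV
kT_joule = kT_ev * EV_IN_JOULE

# Maxwell distribution: most probable and root-mean-square speeds.
v_most_probable = math.sqrt(2 * kT_joule / M_NEUTRON)
v_rms = math.sqrt(3 * kT_joule / M_NEUTRON)

print(f"kT              = {kT_ev:.4f} eV")
print(f"2 kT            = {2 * kT_ev:.4f} eV")
print(f"v_most_probable = {v_most_probable:.0f} m/s")
print(f"v_rms           = {v_rms:.0f} m/s")
```

Twice kT comes to about 0.0505 eV, which is the number to set against the 0.052 eV Higgs-neutrino energy in the text.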

Neutrinomasses

The Electron-(Anti)Neutrino is massless as base-neutrinoic weakon eigenstate.

The Muon-(Anti)Neutrino is also massless as base-neutrinoic weakon eigenstate.

The Tauon-(Anti)Neutrino is not massless with inertial eigenstate meaned at 3.0 eV.

The weakon kernel-eigenstates are 'squared' or doubled (2x2=2+2) in comparison with the gluonic-eigenstate (one can denote the colourcharges as (R²G²B²)[½] and as (RGB)[1] respectively say and with the [] bracket denoting gauge-spin and RGB meaning colours Red-Green-Blue).

The scalar Higgs-(Anti)Neutrino becomes then defined in: (R⁴G⁴B⁴)[0].

The twinned neutrino state so becomes MANIFESTED in a coupling of the scalar Higgs-Neutrino with a massless base neutrino in a (R⁶G⁶B⁶)[0+½] mass-induction template.

The Higgs-Neutrino is bosonic and so not subject to the Pauli Exclusion Principle; but quantized in the form of the FG-differential of the 0.052 eV Higgs-Restmass-Induction.

Subsequently all experimentally observed neutrino-oscillations should show a stepwise energy induction in units of the Higgs-neutrino mass of 0.052 eV. This was the case in the Super-Kamiokande experiments; and which was interpreted as a mass-differential between the muonic and tauonic neutrinoic forms.

Sterile neutrino back from the dead

17:28 22 June 2010 by David Shiga - Magazine Issue 2766

A ghostly particle given up for dead is showing signs of life.

Not only could this "sterile" neutrino be the stuff of dark matter, thought to make up the bulk of our universe, it might also help to explain how an excess of matter over antimatter arose in our universe.

Neutrinos are subatomic particles that rarely interact with ordinary matter. They are known to come in three flavours – electron, muon and tau – with each able to spontaneously transform into another.

In the 1990s, results from the Liquid Scintillator Neutrino Detector (LSND) at the Los Alamos National Laboratory in New Mexico suggested there might be a fourth flavour: a "sterile" neutrino that is even less inclined to interact with ordinary matter than the others.

Hasty dismissal

Sterile neutrinos would be big news because the only way to detect them would be by their gravitational influence – just the sort of feature needed to explain dark matter.

Then in 2007 came the disheartening news that the Mini Booster Neutrino Experiment (MiniBooNE, pictured) at the Fermi National Accelerator Laboratory in Batavia, Illinois, had failed to find evidence of them.

But perhaps sterile neutrinos were dismissed too soon. While MiniBooNE used neutrinos to look for the sterile neutrino, LSND used antineutrinos – the antimatter equivalent. Although antineutrinos should behave exactly the same as neutrinos, just to be safe, the MiniBooNE team decided to repeat the experiment – this time with antineutrinos.

Weird excess

Lo and behold, the team saw muon antineutrinos turning into electron antineutrinos at a higher rate than expected – just like at LSND. MiniBooNE member Richard Van de Water reported the result at a neutrino conference in Athens, Greece, on 14 June.

The excess could be because muon antineutrinos turn into sterile neutrinos before becoming electron antineutrinos, says Fermilab physicist Dan Hooper, who is not part of MiniBooNE. "This is very, very weird," he adds.

Although it could be a statistical fluke, Hooper suggests that both MiniBooNE results could be explained if antineutrinos can change into sterile neutrinos but neutrinos cannot – an unexpected difference in behaviour.

The finding would fit nicely with research from the Main Injector Neutrino Oscillation Search, or MINOS, also at Fermilab, which, the same day, announced subtle differences in the oscillation behaviour of neutrinos and antineutrinos.

Antimatter and matter are supposed to behave like mirror versions of each other, but flaws in this symmetry could explain how our universe ended up with more matter.

Tonyblue

Aside from this multidimensional hyperphysics, a more 'relativistic' lower dimensional explanation is also logistically feasible.

Tony,

A Netherlands group claims to have solved the faster than light neutrino problem by applying special relativity to the GPS satellites:

http://www.kurzweilai.net/faster-than-light-neutrino-puzzle-claimed-solved-by-special-relativity

As a result the neutrinos are not faster than light.

Richard (posting in quantumrelativity yahoo forum October 22nd, 2011)

Thank you Richard for this link and contribution.

Faster-than-light neutrino puzzle claimed solved by special relativity

October 14, 2011 by Editor

(Credit: CERN)

The relativistic motion of clocks on board GPS satellites exactly accounts for the superluminal effect in the OPERA experiment, says physicist Ronald van Elburg at the University of Groningen in the Netherlands, The Physics arXiv Blog reports.

“From the perspective of the clock, the detector is moving towards the source and consequently the distance travelled by the particles as observed from the clock is shorter,” says van Elburg. By this he means shorter than the distance measured in the reference frame on the ground. The OPERA team overlooks this because it assumes the clocks are on the ground not in orbit.

Van Elburg calculates that it should cause the neutrinos to arrive 32 nanoseconds early. But this must be doubled because the same error occurs at each end of the experiment. So the total correction is 64 nanoseconds, almost exactly what the OPERA team observed.

Ref.: Ronald A.J. van Elburg, Times Of Flight Between A Source And A Detector Observed From A GPS Satellite, arxiv.org/abs/1110.2685


Date: Fri, 21 Oct 2011 01:06:21 -0700

Subject: neutrinos faster than speed of light?

From: bmcelligott4789@gmail.com

To: omniphysics@cosmosdawn.net

Hello! i've been reading on your website for a couple years now off and on, i really enjoy what you've done, the truth that you have found and helped so many others find for themselves. you are healing our concept of self and that is a commendable task.

i am writing now because i was wondering what you had to say about the neutrinos breaking lightspeed barrier, i can't remember if you have posted about it or not, did a quick search and it didn't find anything....anyways, would love to hear your take on things.

Have a wonderful day!

Brendan

Faster than light particles found, claim scientists

Particle physicists detect neutrinos travelling faster than light, a feat forbidden by Einstein's theory of special relativity

Ian Sample, science correspondent

guardian.co.uk,

Thursday 22 September 2011 23.32 BST

Neutrinos, like the ones above, have been detected travelling faster than light, say particle physicists.

Photograph: Dan Mccoy /Corbis

It is a concept that forms a cornerstone of our understanding of the universe and the concept of time – nothing can travel faster than the speed of light.

But now it seems that researchers working in one of the world's largest physics laboratories, under a mountain in central Italy, have recorded particles travelling at a speed that is supposedly forbidden by Einstein's theory of special relativity.

Scientists at the Gran Sasso facility will unveil evidence on Friday that raises the troubling possibility of a way to send information back in time, blurring the line between past and present and wreaking havoc with the fundamental principle of cause and effect.

They will announce the result at a special seminar at Cern – the European particle physics laboratory – timed to coincide with the publication of a research paper (pdf) describing the experiment.

Researchers on the Opera (Oscillation Project with Emulsion-tRacking Apparatus) experiment recorded the arrival times of ghostly subatomic particles called neutrinos sent from Cern on a 730km journey through the Earth to the Gran Sasso lab.

The trip would take a beam of light 2.4 milliseconds to complete, but after running the experiment for three years and timing the arrival of 15,000 neutrinos, the scientists discovered that the particles arrived at Gran Sasso sixty billionths of a second earlier, with an error margin of plus or minus 10 billionths of a second.

The measurement amounts to the neutrinos travelling faster than the speed of light by a fraction of 20 parts per million. Since the speed of light is 299,792,458 metres per second, the neutrinos were evidently travelling at 299,798,454 metres per second.

The result is so unlikely that even the research team is being cautious with its interpretation. Physicists said they would be sceptical of the finding until other laboratories confirmed the result.

Antonio Ereditato, coordinator of the Opera collaboration, told the Guardian: "We are very much astonished by this result, but a result is never a discovery until other people confirm it.

"When you get such a result you want to make sure you made no mistakes, that there are no nasty things going on you didn't think of. We spent months and months doing checks and we have not been able to find any errors.

"If there is a problem, it must be a tough, nasty effect, because trivial things we are clever enough to rule out."

The Opera group said it hoped the physics community would scrutinise the result and help uncover any flaws in the measurement, or verify it with their own experiments.

Subir Sarkar, head of particle theory at Oxford University, said: "If this is proved to be true it would be a massive, massive event. It is something nobody was expecting.

"The constancy of the speed of light essentially underpins our understanding of space and time and causality, which is the fact that cause comes before effect."

The key point underlying causality is that the laws of physics as we know them dictate that information cannot be communicated faster than the speed of light in a vacuum, added Sarkar.

"Cause cannot come after effect and that is absolutely fundamental to our construction of the physical universe. If we do not have causality, we are buggered."

The Opera experiment detects neutrinos as they strike 150,000 "bricks" of photographic emulsion films interleaved with lead plates. The detector weighs a total of 1300 tonnes.

Despite the marginal increase on the speed of light observed by Ereditato's team, the result is intriguing because its statistical significance, the measure by which particle physics discoveries stand and fall, is so strong.

Physicists can claim a discovery if their result stands more than five standard deviations from the expected value, which puts the chance of it being a fluke of statistics at less than one in a few million. The Gran Sasso team's result is six standard deviations.
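To see where "one in a few million" comes from, the standard deviations can be converted into tail probabilities. This is a minimal sketch that assumes a one-sided Gaussian tail, a common convention in particle physics; the article itself does not say which convention is meant.

```python
import math

def one_sided_tail(sigma: float) -> float:
    """Probability that a Gaussian fluctuation exceeds `sigma` standard deviations."""
    return 0.5 * math.erfc(sigma / math.sqrt(2.0))

for s in (5.0, 6.0):
    p = one_sided_tail(s)
    print(f"{s:.0f} sigma: p = {p:.2e} (about 1 in {1 / p:,.0f})")
```

Under that assumption five standard deviations correspond to roughly one chance in 3.5 million, and six standard deviations to roughly one in a billion.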

Ereditato said the team would not claim a discovery because the result was so radical. "Whenever you touch something so fundamental, you have to be much more prudent," he said.

Alan Kostelecky, an expert in the possibility of faster-than-light processes at Indiana University, said that while physicists would await confirmation of the result, it was none the less exciting.

"It's such a dramatic result it would be difficult to accept without others replicating it, but there will be enormous interest in this," he told the Guardian.

One theory Kostelecky and his colleagues put forward in 1985 predicted that neutrinos could travel faster than the speed of light by interacting with an unknown field that lurks in the vacuum.

"With this kind of background, it is not necessarily the case that the limiting speed in nature is the speed of light," he said. "It might actually be the speed of neutrinos and light goes more slowly."

Neutrinos are mysterious particles. They have a minuscule mass, no electric charge, and pass through almost any material as though it was not there.

Kostelecky said that if the result was verified – a big if – it might pave the way to a grand theory that marries gravity with quantum mechanics, a puzzle that has defied physicists for nearly a century.

"If this is confirmed, this is the first evidence for a crack in the structure of physics as we know it that could provide a clue to constructing such a unified theory," Kostelecky said.

Heinrich Paes, a physicist at Dortmund University, has developed another theory that could explain the result. The neutrinos may be taking a shortcut through space-time, by travelling from Cern to Gran Sasso through extra dimensions. "That can make it look like a particle has gone faster than the speed of light when it hasn't," he said.

But Susan Cartwright, senior lecturer in particle astrophysics at Sheffield University, said: "Neutrino experimental results are not historically all that reliable, so the words 'don't hold your breath' do spring to mind when you hear very counter-intuitive results like this."

Teams at two experiments known as T2K in Japan and MINOS near Chicago in the US will now attempt to replicate the finding. The MINOS experiment saw hints of neutrinos moving at faster than the speed of light in 2007 but has yet to confirm them.

• This article was amended on 23 September 2011 to clarify the relevance of the speed of light to causality.

Dear Brendan!

The measured violation of the speed of light cosmic acceleration limit as 1 part in 50,000, if confirmed, simply illustrates the nature of the (anti)neutrinos in their role of the standard model of particle physics.

As you can discern in the accompanying descriptions, reproduced at:

http://www.thuban.spruz.com/forums/?page=post&fid=&lastp=1&id=E4C02335-4EC2-4BEC-8532-7D7CFFFDF4EC

the neutrinos are massless in their basic electron and muon flavours, but experience a Higgsian Restmass Induction at the 0.052 electronvolt energy level and in the form of a 'Sterile Higgs Neutrino'. This constituted a verified experimental result at the Super Kamiokande neutrino detector beneath the Japanese Alps and was published worldwide on June 4th, 1998.

The (provisionally) measured increase in 'c' as the lightspeed invariant was the ratio 299,792,458/299,798,454=0.99998...

This can be translated to a decrease in the mass of the electron by this Higgsian neutrino mass induction in the range from: 2.969 - 3.021 eV, centred on 2.995 eV and differing by 0.052106 eV as the mass differential measured by the Kamiokande detector.

Using a relativistic electronmass at 0.18077c at the 8.5748 keV level of electric potential, (as in the derivation of the Higgs neutrinomass below and using cosmic calibrated units *), then decreases the relativistic electronmass from 9.290527155x10^-31 kg* or 520,491.856 eV*, by 2.995 eV* (or so 5.4x10^-36 kg*) as 520,491.856 eV* - 2.995 eV* = 520,488.861 eV*.

This compares as a trisected kernel (see the fractional electron charge distribution as the Leptonic Outer Ring) within the error margins to the variation in 'c' ratio as per the article:

520,488.861/520,491.856=0.999994=1/1.000006 as 3 parts in 521,000 or about 1 part in 174,000 and so about 30% of the lightspeed variance as the ratio 299,792,458/299,798,454=0.99998...and as 1 part in 50,000.
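The two ratios compared above can be reproduced directly. A small sketch using only the numbers given in the text (the variable names are illustrative):

```python
m_before = 520_491.856      # relativistic electron mass in eV* (from the text)
dm = 2.995                  # Higgsian neutrino mass induction in eV* (from the text)
c_light = 299_792_458       # m/s
v_quoted = 299_798_454      # m/s, neutrino speed quoted in the article

mass_shift = dm / m_before                      # fractional mass decrease
speed_shift = (v_quoted - c_light) / v_quoted   # fractional lightspeed variance

print(f"mass ratio  : {1 - mass_shift:.6f} (1 part in {1 / mass_shift:,.0f})")
print(f"speed ratio : {1 - speed_shift:.6f} (1 part in {1 / speed_shift:,.0f})")
print(f"mass shift as share of speed shift: {mass_shift / speed_shift:.0%}")
```

The mass shift comes out at about 1 part in 174,000 and the speed shift at 1 part in 50,000, so the first is a little under 30% of the second.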

A detailed discussion of the subatomic quark-lepton charge distribution is found here:

The Charge Distribution of the Wavequarkian Standard Model - "Large proton Halo sparks devilish row"

http://www.thuban.spruz.com/forums/?page=post&id=8C67A51B-F47A-4646-A053-ADAD87677F63&fid=019788EF-0F1C-4E3D-B875-AB41438E63AD

The cosmic acceleration limit so is not violated by measuring electron-neutrino interactions, but the Higgs Bosonic Restmass Induction manifests in a subatomic internal charge redistribution, which reduces the energy of the interacting electron (or muon or tauon) interactions, which are always associated with their leptonic parental counterparts.

It so is the massless eigenstate of the 'Precursor Higgs Boson' as a 'Scalar RestmassPhoton' coupled to a colourcharged gauge photon of 'sourcesink antiradiation' as a Gauge Goldstone Boson (string or brane), which partitions this primordial selfstate into a trisected 'Scalar Higgs Neutrino Kernel' encompassed by an 'Inner Mesonic Ring' and an 'Outer Leptonic Ring', then defining a post Big Bang reconfiguration of the unified gauged particle states, commonly known as the Standard Model of Particle Physics.

Measuring (anti)neutrinos as travelling 'faster than the speed of light', so can be interpreted as a superbrane interaction in the multidimensional selfstate preceding the so called Big Bang, which was in fact preceded by an inflationary de Broglie wavematter phase epoch, where the 'speed of light' is by nature tachyonic as a wavematter speed VdB.

Data:

Schwarzschild-HubbleRadius:

R_H = 2G_o M_H/c^2

Gravitational String-Oscillator(ZPE)Harmonic-Potential-Energy:

E_Po = ½hf_o = hc/(4πl_P) = ½l_P c^2/G_o = ½m_P c^2 = G_o m_P M_H/R_H

for string-duality coupling between wormhole mass m_P and closure Black Hole metric

M_H = ρ_critical V_Seed

Gravitational Acceleration per unit-stringmass:

a_g = G_o M_H/R_H^2 = G_o M_H c^4/(4G_o^2 M_H^2) = c^4/(4G_o M_H) = c^2/(2R_H) = ½H_o c

for a Nodal Hubble-Constant:

H_o = c/R_H

Inflaton parameters:

De Broglie Phase-Velocity (VdB) and de Broglie Phase-Acceleration (AdB):

V_dB = R_H f_o ~ 4.793x10^56 (m/s)* ~ 1.598x10^48 c = 10^22 R_H

A_dB = R_H f_o^2 ~ 1.438x10^87 (m/s^2)*

De Broglie Phase Speed: V_dB = wavelength x frequency = (h/(mV_Group)) x (mc^2/h) = c^2/V_Group > c for all V_Group < c
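The phase-speed relation is easy to evaluate for a few group speeds. The sketch below is illustrative only: the function name is hypothetical, SI lightspeed is used rather than the starred units, and the source frequency and Hubble radius are back-calculated from the two quoted inflaton values rather than taken from the text.

```python
C = 299_792_458.0  # speed of light in m/s (SI)

def phase_speed(v_group: float) -> float:
    """De Broglie phase speed c^2 / v_group for a particle moving at v_group."""
    if not 0.0 < v_group < C:
        raise ValueError("v_group must lie strictly between 0 and c")
    return C * C / v_group

for fraction in (0.18077, 0.5, 0.999):
    v_ph = phase_speed(fraction * C)
    print(f"v_group = {fraction:.5f} c  ->  phase speed = {v_ph / C:.4f} c")

# Values implied by the quoted phase velocity and phase acceleration
# (derived here, not stated in the text):
V_DB, A_DB = 4.793e56, 1.438e87
f_o = A_DB / V_DB      # because A_dB = R_H f_o^2 and V_dB = R_H f_o
R_H = V_DB / f_o
print(f"f_o ~ {f_o:.3e} Hz*, R_H ~ {R_H:.3e} m*")
```

For every group speed below c the phase speed exceeds c, which is the sense in which the wavematter speed is called tachyonic above.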

This then represents 'my take' on the recent 'faster than light' measurements at Gran Sasso and CERN according to Quantum Relativity.

Tonyblue

Hypersphere volumes and the mass of the Tau-neutrino

Consider the universe's thermodynamic expansion to proceed at an initializing time (and practically at lightspeed for the lightpath x=ct describing the hypersphere radii) from a single spacetime quantum with a quantized toroidal volume 2π²rw³ and where rw is the characteristic wormhole radius for this basic building unit for a quantized universe (say in string parameters given in the Planck scale and its transformations).

At a time tG, say so 18.85 minutes later, the count of space time quanta can be said to be 9.677x10^102 for a universal 'total hypersphere radius' of about rG=3.39x10^11 meters and for a G-Hypersphere volume of so 7.69x10^35 cubic meters.

{This radius is about 2.3 Astronomical Units (AUs) and about the distance of the Asteroid Belt from the star Sol in a typical (our) solar system.}
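The G-count follows from dividing the hypersphere volume by the volume of one spacetime quantum. A minimal sketch, assuming the wormhole perimeter of 10^-22 meters defined further down in the text and a rounded lightspeed of 3x10^8 m/s (an assumption about the starred units, not a value stated here):

```python
import math

C_STAR = 3.0e8                   # assumed rounded lightspeed in m/s
r_w = 1e-22 / (2 * math.pi)      # wormhole radius in meters
r_G = 3.39e11                    # G-hypersphere radius in meters (from the text)

def toroidal_volume(r: float) -> float:
    """Volume 2*pi^2*r^3 used in the text for a hypersphere of radius r."""
    return 2 * math.pi**2 * r**3

v_G = toroidal_volume(r_G)
count_G = v_G / toroidal_volume(r_w)   # same as (r_G / r_w)**3
t_G_min = r_G / C_STAR / 60            # light-path time x = ct, in minutes

print(f"G-volume : {v_G:.3e} cubic meters")
print(f"G-count  : {count_G:.3e} spacetime quanta")
print(f"t_G      : {t_G_min:.2f} minutes")
```

With these inputs the volume, count and time land within a fraction of a percent of the quoted 7.69x10^35 cubic meters, 9.677x10^102 quanta and 18.85 minutes.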

This modelling of a mapping of the quantum-microscale onto the cosmological macroscale should now indicate the mapping of the wormhole scale onto the scale of the sun itself.

rw/RSun(i)=Re/rE for RSun(i)=rw.rE/Re=1,971,030 meters. This gives an 'inner' solar core of diameter about 3.94x10^6 meters.

As the classical electron radius is quantized in the wormhole radius in the formulation Re=10^10rw/360, rendering a finestructure for Planck's Constant as a 'superstring-parametric': h=rw/(2Re.c^3); the 'outer' solar scale becomes RSun(o)=360RSun(i)=7.092x10^8 meters as the observed radius for the solar disk.

19 seconds later; a F-Hypersphere radius is about rF=3.45x10^11 meters for a F-count of so 1.02x10^103 spacetime quanta.

We also define an E-Hypersphere radius at rE=3.44x10^14 meters and an E-count of so 10^112 to circumscribe this 'solar system' in so 2,300 AU.

We so have 4 hypersphere volumes, based on the singularity-unit and magnified via spacetime quantization in the hyperspheres defined in counters G, F and E. We consider these counters as somehow fundamental to the universe's expansion, serving as boundary conditions in some manner. As counters, those googol-numbers can be said to be defined algorithmically and independent of mensuration physics of any kind.

The mapping of the atomic nucleus onto the thermodynamic universe of the hyperspheres

Should we consider the universe to follow some kind of architectural blueprint; then we might attempt to use our counters to be isomorphic (same form or shape) in a one-to-one mapping between the macrocosmos and the microcosmos. So we define a quantum geometry for the nucleus in the simplest atom, say Hydrogen. The hydrogenic nucleus is a single proton of quark-structure udu and which we assign a quantum geometric template of Kernel-InnerRing-OuterRing (K-IR-OR), say in a simple model of concentricity.

We set the up-quarks (u) to become the 'smeared out core' in say a tripartition uuu so allowing a substructure for the down-quark (d) to be u+InnerRing. A down-quark so is a unitary ring coupled to a kernel-quark. The proton's quark-content so can be rewritten and without loss of any of the properties associated with the quantum conservation laws; as proton-> udu->uuu+IR=KKK+IR. We may now label the InnerRing as Mesonic and the OuterRing as Leptonic.

The OuterRing is so definitive for the strange quark in quantum geometric terms: s=u+OR.

A neutron's quark content so becomes neutron=dud=KIR.K.KIR with a 'hyperon resonance' in the lambda=sud=KOR.K.KIR and so allowing the neutron's beta decay to proceed in disassociation from a nucleus (where protons and neutrons bind in meson exchange); i.e. in the form of 'free neutrons'.

The neutron decays in the oscillation potential between the mesonic inner ring and the leptonic outer ring as the 'ground-energy' eigenstate.

There actually exist three uds-quark states which decay differently via strong, electromagnetic and weak decay rates in the uds (Sigma^o Resonance); usd (Sigma^o) and the sud (Lambda^o) in increasing stability.

This quantum geometry then indicates the behaviour of the triple-uds decay from first principles, whereas the contemporary standard model does not, considering the u-d-s quark eigenstates to be quantum geometrically undifferentiated.

The nuclear interactions, both strong and weak are confined in a 'Magnetic Asymptotic Confinement Limit' coinciding with the Classical Electron radius Re=ke²/(me.c²) and in a scale of so 3 Fermi or 2.8x10^-15 meters. At a distance further away from this scale, the nuclear interaction strength vanishes rapidly.

The wavenature of the nucleus is given in the Compton-Radius Rc=h/(2πmc) with m the mass of the nucleus, say a proton; the latter so having Rc=2x10^-16 meters or so 0.2 fermi.

The wave-matter (after de Broglie generalising wavespeed vdB from c in Rc.c) then relates the classical electron radius as the 'confinement limit' to the Compton scale in the electromagnetic finestructure constant in Re=Alpha.Rc.

The extension to the Hydrogen-Atom is obtained in the expression Re=Alpha².RBohr1 for the first Bohr-Radius as the 'ground-energy' of so 13.6 eV at a scale of so 10^-11 to 10^-10 meters (Angstroms).
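These scale relations can be checked numerically. The sketch below assumes CODATA 2018 SI constants rather than the starred units. Note one assumption about how the relations are read: Re=Alpha.Rc reproduces 2.8x10^-15 meters when Rc is evaluated with the electron mass, while the 0.2 fermi figure corresponds to the proton mass.

```python
import math

HBAR = 1.054571817e-34      # reduced Planck constant in J s
C = 299_792_458.0           # speed of light in m/s
E_CHARGE = 1.602176634e-19  # elementary charge in C
EPS0 = 8.8541878128e-12     # vacuum permittivity in F/m
M_E = 9.1093837015e-31      # electron mass in kg
M_P = 1.67262192369e-27     # proton mass in kg

alpha = E_CHARGE**2 / (4 * math.pi * EPS0 * HBAR * C)           # fine-structure constant
r_classical = E_CHARGE**2 / (4 * math.pi * EPS0 * M_E * C**2)   # k e^2 / (m_e c^2)
r_compton_e = HBAR / (M_E * C)    # Compton radius h/(2 pi m c) for the electron
r_compton_p = HBAR / (M_P * C)    # the same expression for the proton
r_bohr = r_compton_e / alpha      # first Bohr radius

print(f"classical electron radius : {r_classical:.3e} m")
print(f"alpha * Rc (electron)     : {alpha * r_compton_e:.3e} m")
print(f"alpha^2 * Bohr radius     : {alpha**2 * r_bohr:.3e} m")
print(f"Rc (proton)               : {r_compton_p:.3e} m")
```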

These 'facts of measurements' of the standard models now allow our quantum geometric correspondences to assume cosmological significance in their isomorphic mapping. We denote the OuterRing as the classical electron radius and introduce the InnerRing as a mesonic scale contained within the geometry of the proton and all other elementary baryonic- and hadronic particles.

Firstly, we define a mean macro-mesonic radius as: rM=½(rF+rG) ~ 3.42x10^11 meters and set the macro-leptonic radius to rE=3.44x10^14 meters.

Secondly, we map the macroscale onto the microscale, say in the simple proportionality relation, using

(de)capitalised symbols: Re/Rm=rE/rM.

We can so solve for the micro-mesonic scale Rm=Re.rM/rE ~ 2.76x10^-18 meters.

So reducing the apparent measured 'size' of a proton by a factor of about 1000 gives the scale of the subnuclear mesonic interaction, say the strong interaction coupling by pions.
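The proportionality can be evaluated in a few lines. This sketch uses the rounded classical electron radius of 2.8x10^-15 meters quoted earlier, so the result differs slightly in the third digit from the 2.76x10^-18 meters given above.

```python
r_F = 3.45e11        # F-hypersphere radius in meters (from the text)
r_G = 3.39e11        # G-hypersphere radius in meters (from the text)
r_E = 3.44e14        # E-hypersphere radius in meters (from the text)
R_e = 2.8e-15        # classical electron radius in meters (rounded value)

r_M = 0.5 * (r_F + r_G)      # mean macro-mesonic radius
R_m = R_e * r_M / r_E        # micro-mesonic scale from Re/Rm = rE/rM

print(f"rM = {r_M:.3e} m")
print(f"Rm = {R_m:.3e} m")
print(f"Re/Rm = {R_e / R_m:.0f}")
```

The ratio Re/Rm equals rE/rM, which is the factor of about 1000 referred to above.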

The Higgsian Scalar-Neutrino

The (anti)neutrinos are part of the electron mass in a decoupling process between the kernel and the rings. Neutrino mass is so not cosmologically significant and cannot be utilized in 'missing mass' models.

We may define the kernel-scale as that of the singular spacetime-quantum unit itself, namely as the wormhole radius rw=10^-22/2π meters.

Before the decoupling between kernel and rings, the kernel-energy can be said to be strong-weakly coupled or unified to encompass the gauge-gluon of the strong interaction and the gauge-weakon of the weak interaction defined in a coupling between the OuterRing and the Kernel and bypassing the mesonic InnerRing.

So for matter, a W-Minus (weakon) must consist of a coupled lepton part, yet linking to the strong interaction via the kernel part. If now the colour-charge of the gluon transmutates into a 'neutrino-colour-charge'; then this decoupling will not only define the mechanics for the strong-weak nuclear unification coupling; but also the energy transformation of the gauge-colour charge into the gauge-lepton charge.

There are precisely 8 gluonic transitive energy permutation eigenstates between a 'radiative-additive' Planck energy in W(hite)=E=hf and an 'inertial-subtractive' Einstein energy in B(lack)=E=mc^2, which describe the baryonic- and hyperonic 'quark-sectors' in: mc^2=BBB, BBW, WBB, BWB, WBW, BWW, WWB and WWW=hf.

The permutations are cyclic and not linearly commutative. For mesons (quark-antiquark eigenstates), the permutations are BB, BW, WB and WW in the SU(2) and SU(3) Unitary Symmetries.

So generally, we may state, that the gluon is unified with a weakon before decoupling; this decoupling 'materialising' energy in the form of mass, namely the mass of the measured 'weak-interaction-bosons' of the standard model (W- for charged matter; W+ for charged antimatter and Z^o for neutral mass-currents say).

Experiment shows, that a W- decays into spin-aligned electron-antineutrino or muon-antineutrino or tauon-antineutrino pairings under the conservation laws for momentum and energy.

So, using our quantum geometry, we realise, that the weakly decoupled electron must represent the OuterRing, and just as shown in the analysis of QED (Quantum-Electro-Dynamics). Then it can be inferred, that the Electron's Antineutrino represents a transformed and materialised gluon via its colourcharge, now decoupled from the kernel.

Then the OuterRing contracts (say along its magnetoaxis defining its asymptotic confinement); in effect 'shrinking the electron' in its inertial and charge- properties to its experimentally measured 'point-particle-size'. Here we define this process as a mapping between the Electronic wavelength 2πRe and the wormhole perimeter λw=2πrw.

But in this process of the 'shrinking' classical electron radius towards the gluonic kernel (say); the mesonic ring will be encountered and it is there, that any mass-inductions should occur to differentiate a massless lepton gauge-eigenstate from that manifested by the weakon precursors.

{Note: Here the W- inducing a lefthanded neutron to decay weakly into a lefthanded proton, a lefthanded electron and a righthanded antineutrino. Only lefthanded particles decay weakly in CP-parity-symmetry violation, effected by neutrino-gauge definitions from first principles}.

This so defines a neutrino-oscillation potential at the InnerRing-Boundary. Using our proportions and assigning any neutrino-masses mν as part of the electronmass me, gives the following proportionality as the mass eigenvalue of the Tau-neutrino:

mν=me.λw.rE/(2π.rM.Re) ~ 5.4x10^-36 kg or 3.0 eV.

So we have derived, from first principles, a (anti)neutrinomass eigenstate of 3 eV.
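The proportionality can be evaluated numerically. A minimal sketch under stated assumptions: SI constants are used for the conversion to electronvolts (the text works in starred units), the classical electron radius is rounded to 2.8x10^-15 meters, and the formula is tried with both the electron rest mass and the relativistic electronmass of 9.290527155x10^-31 kg* quoted earlier. The function name is hypothetical.

```python
import math

C = 299_792_458.0            # speed of light in m/s (SI)
EV = 1.602176634e-19         # joules per electronvolt (SI)

lambda_w = 1e-22             # wormhole perimeter 2*pi*rw in meters
r_E, r_M = 3.44e14, 3.42e11  # macro-leptonic and macro-mesonic radii in meters
R_e = 2.8e-15                # classical electron radius in meters (rounded)

def neutrino_mass(m_e: float) -> float:
    """Evaluate m_e * lambda_w * r_E / (2*pi * r_M * R_e) in kilograms."""
    return m_e * lambda_w * r_E / (2 * math.pi * r_M * R_e)

for label, m_e in (("electron rest mass", 9.1093837015e-31),
                   ("relativistic electronmass", 9.290527155e-31)):
    m_nu = neutrino_mass(m_e)
    print(f"{label}: {m_nu:.2e} kg = {m_nu * C**2 / EV:.2f} eV")
```

Both inputs give a value within a few percent of 3 eV; the relativistic electronmass lands closer to the quoted 3.0 eV.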

This confirms the Mainz, Germany Result as the upper limit for neutrino masses resulting from ordinary Beta-Decay and indicates the importance of the primordial beta-decay for the cosmogenesis and the isomorphic scale mappings stated above.

The hypersphere intersection of the G- and F-count of the thermodynamic expansion of the mass-parametric universe so induces a neutrino-mass of 3 eV at the 2.76x10^-18 meter marker.

The more precise G-F differential in terms of eigenenergy is 0.052 eV as the mass-eigenvalue for the Higgs-(Anti)neutrino (which is scalar of 0-spin and constituent of the so called Higgs Boson as the kernel-Eigenstate). This has been experimentally verified in the Super-Kamiokande (Japan) neutrino experiments published in 1998 and in subsequent neutrino experiments around the globe, say Sudbury, KamLAND, Dubna, MiniBooNE and MINOS.

This Higgs-Neutrino-Induction is 'twinned' meaning that this energy can be related to the energy of so termed 'slow- or thermal neutrons' in a coupled energy of so twice 0.0253 eV for a thermal equilibrium at so 20° Celsius and a rms-standard-speed of so 2200 m/s from the Maxwell statistical distributions for the kinematics.
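The thermal-neutron figures can be checked against each other. A short sketch, assuming SI constants and the CODATA 2018 neutron mass (neither is given in the text):

```python
K_B = 1.380649e-23          # Boltzmann constant in J/K (exact, SI)
EV = 1.602176634e-19        # joules per electronvolt
M_N = 1.67492749804e-27     # neutron mass in kg (CODATA 2018)

kinetic_ev = 0.5 * M_N * 2200.0**2 / EV    # kinetic energy at 2200 m/s
thermal_ev = K_B * (20.0 + 273.15) / EV    # k_B * T at 20 degrees Celsius
twinned_ev = 2 * 0.0253                    # the 'twinned' energy named in the text

print(f"kinetic energy at 2200 m/s : {kinetic_ev:.4f} eV")
print(f"k_B*T at 20 Celsius        : {thermal_ev:.4f} eV")
print(f"twinned energy             : {twinned_ev:.4f} eV ({twinned_ev / 0.052:.0%} of 0.052 eV)")
```

Both routes give about 0.0253 eV, so the doubled value of 0.0506 eV sits a few percent below the 0.052 eV induction energy.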

Neutrinomasses

The Electron-(Anti)Neutrino is massless as base-neutrinoic weakon eigenstate.

The Muon-(Anti)Neutrino is also massless as base-neutrinoic weakon eigenstate.

The Tauon-(Anti)Neutrino is not massless with inertial eigenstate meaned at 3.0 eV.

The weakon kernel-eigenstates are 'squared' or doubled (2x2=2+2) in comparison with the gluonic-eigenstate (one can denote the colourcharges as (R²G²B²)[½] and as (RGB)[1] respectively say and with the [] bracket denoting gauge-spin and RGB meaning colours Red-Green-Blue).

The scalar Higgs-(Anti)Neutrino becomes then defined in: (R^4G^4B^4)[0].

The twinned neutrino state so becomes MANIFESTED in a coupling of the scalar Higgs-Neutrino with a massless base neutrino in a (R^6G^6B^6)[0+½] mass-induction template.

The Higgs-Neutrino is bosonic and so not subject to the Pauli Exclusion Principle; but quantized in the form of the FG-differential of the 0.052 eV Higgs-Restmass-Induction.

Subsequently all experimentally observed neutrino-oscillations should show a stepwise energy induction in units of the Higgs-neutrino mass of 0.052 eV. This was the case in the Super-Kamiokande experiments; and which was interpreted as a mass-differential between the muonic and tauonic neutrinoic forms.

Sterile neutrino back from the dead

17:28 22 June 2010 by David Shiga - Magazine Issue 2766

A ghostly particle given up for dead is showing signs of life.

Not only could this "sterile" neutrino be the stuff of dark matter, thought to make up the bulk of our universe, it might also help to explain how an excess of matter over antimatter arose in our universe.

Neutrinos are subatomic particles that rarely interact with ordinary matter. They are known to come in three flavours – electron, muon and tau – with each able to spontaneously transform into another.

In the 1990s, results from the Liquid Scintillator Neutrino Detector (LSND) at the Los Alamos National Laboratory in New Mexico suggested there might be a fourth flavour: a "sterile" neutrino that is even less inclined to interact with ordinary matter than the others.

Hasty dismissal

Sterile neutrinos would be big news because the only way to detect them would be by their gravitational influence – just the sort of feature needed to explain dark matter.

Then in 2007 came the disheartening news that the Mini Booster Neutrino Experiment (MiniBooNE, pictured) at the Fermi National Accelerator Laboratory in Batavia, Illinois, had failed to find evidence of them.

But perhaps sterile neutrinos were dismissed too soon. While MiniBooNE used neutrinos to look for the sterile neutrino, LSND used antineutrinos – the antimatter equivalent. Although antineutrinos should behave exactly the same as neutrinos, just to be safe, the MiniBooNE team decided to repeat the experiment – this time with antineutrinos.

Weird excess

Lo and behold, the team saw muon antineutrinos turning into electron antineutrinos at a higher rate than expected – just like at LSND. MiniBooNE member Richard Van de Water reported the result at a neutrino conference in Athens, Greece, on 14 June.

The excess could be because muon antineutrinos turn into sterile neutrinos before becoming electron antineutrinos, says Fermilab physicist Dan Hooper, who is not part of MiniBooNE. "This is very, very weird," he adds.

Although it could be a statistical fluke, Hooper suggests that both MiniBooNE results could be explained if antineutrinos can change into sterile neutrinos but neutrinos cannot – an unexpected difference in behaviour.

The finding would fit nicely with research from the Main Injector Neutrino Oscillation Search, or MINOS, also at Fermilab, which, the same day, announced subtle differences in the oscillation behaviour of neutrinos and antineutrinos.

Antimatter and matter are supposed to behave like mirror versions of each other, but flaws in this symmetry could explain how our universe ended up with more matter.

Tonyblue

Aside from this multidimensional hyperphysics; a more 'relativistic' lower dimensional explanation is also logistically feasible.

Tony,

A Netherlands group claims to have solved the faster than light neutrino problem by applying special relativity to the GPS satellites:

http://www.kurzweilai.net/faster-than-light-neutrino-puzzle-claimed-solved-by-special-relativity

As a result the neutrinos are not faster than light.

Richard (posting in quantumrelativity yahoo forum October 22nd, 2011)

Thank you Richard for this link and contribution.

Faster-than-light neutrino puzzle claimed solved by special relativity

October 14, 2011 by Editor

The relativistic motion of clocks on board GPS satellites exactly accounts for the superluminal effect in the OPERA experiment, says physicist Ronald van Elburg at the University of Groningen in the Netherlands, The Physics arXiv Blog reports.

“From the perspective of the clock, the detector is moving towards the source and consequently the distance travelled by the particles as observed from the clock is shorter,” says van Elburg. By this he means shorter than the distance measured in the reference frame on the ground. The OPERA team overlooks this because it assumes the clocks are on the ground not in orbit.

Van Elburg calculates that it should cause the neutrinos to arrive 32 nanoseconds early. But this must be doubled because the same error occurs at each end of the experiment. So the total correction is 64 nanoseconds, almost exactly what the OPERA team observed.

Ref.: Ronald A.J. van Elburg, Times Of Flight Between A Source And A Detector Observed From A GPS Satellite, arxiv.org/abs/1110.2685
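The size of the claimed correction can be estimated on the back of an envelope. The sketch below is not van Elburg's derivation: it uses the first-order term v.L/c², assumes the satellite moves parallel to the Cern to Gran Sasso baseline, and assumes a typical GPS orbital speed of about 3.9 km/s, a value not stated in the article.

```python
C = 299_792_458.0       # speed of light in m/s
BASELINE_M = 730e3      # Cern to Gran Sasso distance from the Guardian article
V_SAT = 3.9e3           # assumed GPS orbital speed in m/s

shift_one_end = BASELINE_M * V_SAT / C**2   # first-order estimate v*L/c^2
total_shift = 2 * shift_one_end             # doubled, as described in the article

print(f"shift per end : {shift_one_end * 1e9:.1f} ns")
print(f"total shift   : {total_shift * 1e9:.1f} ns")
```

Under these assumptions the estimate comes out near 32 nanoseconds per end and a little over 63 nanoseconds in total, in line with the 32 and 64 nanoseconds quoted.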

Last edited by Didymos on Sun Oct 23, 2011 7:56 am; edited 5 times in total